These are exciting times: the latest cloud-based voice services let you do everything from asking a simple question to making a phone call or turning on a light in the kitchen, completely hands-free. It is fascinating for a first-time user to ask something like “Is it going to rain today?” and get back an answer like “It probably won’t rain in New York today. There’s only a 25% chance.” in very natural human language. This is made possible by the cloud-based architecture frameworks behind Amazon Alexa, Google Assistant, and Microsoft Cortana, just to mention a few.

These architecture frameworks make it relatively painless to build your own voice-activated app. Today I will discuss how you can build an Alexa skill in a few easy steps, using the Alexa Skills Kit (ASK) and a Lambda function running in Amazon Web Services (AWS). The Alexa development environment changes quickly, and the developer console user interface seems to be going through some growing pains. Please note that I will focus on the overall architecture and concepts rather than on tedious step-by-step screenshots, as those would become outdated very quickly.

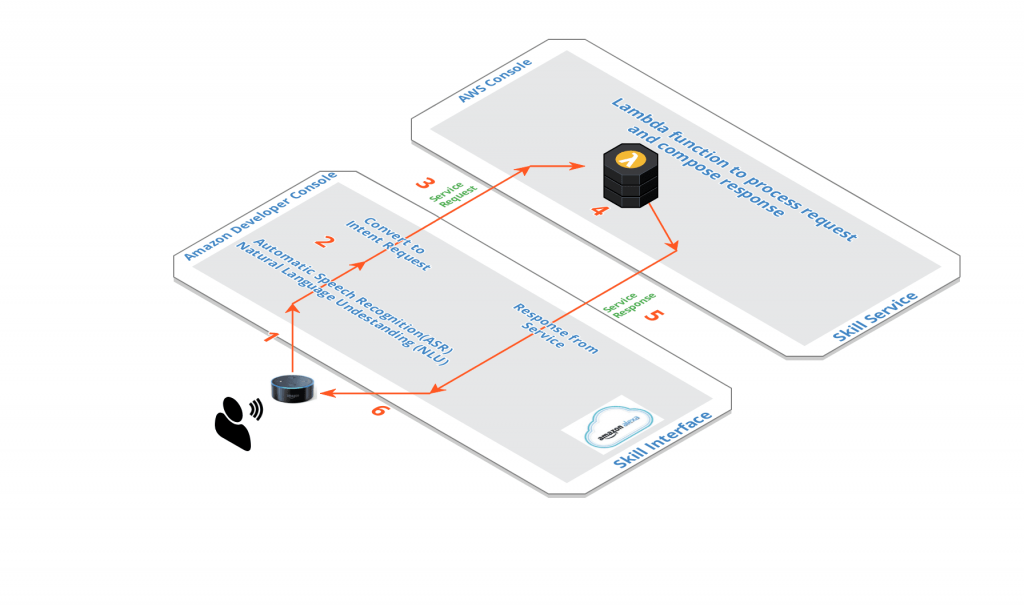

Let’s first understand what an Alexa skill is and its processing flow:

An Alexa skill basically listens to a request from you and sends it to the Amazon cloud for processing, which may trigger a number of activities, for example looking up the current weather at your location or opening a smart door lock at your home. It then comes back to you with a voice response.

Here is a sample processing flow:

- Capture the voice request from the user

- Convert the voice request to a precise request

- Route the request to a back-end service

- Process the request and create a response

- Route the response back to the requester

- Capture the response and convert that to a voice response to the user

What do you need to get started?

- A device with a microphone and speaker that can capture your voice request and speak the response. (Instead of a physical device, you can also use the free simulator from Amazon on your computer.)

- An account on the Amazon Developer Portal, where you can build your Alexa skill interface (free)

- An account on Amazon Web Services (AWS), where you can build your back-end Lambda skill service (free, just use the free tier)

Let’s build our first skill, “Tech Demic”, which will return a random quote to the user.

So how would the user launch your skill? Here is a template of the conversation we need to build:

The skill should have an intuitive and memorable invocation name, and a set of utterances that reflect how the user may ask for your skill.

First, let’s create a new Alexa skill in the developer portal:

Log on to the Amazon Developer Portal and select Amazon Alexa. There is a lot of documentation and many tutorials here, so feel free to explore. Go to Your Alexa Consoles and you will see a list of your Alexa skills, if you currently have any.

To create a new skill you will need to enter:

- Invocation name: tech demic <you can enter your own preferred invocation name, just make sure to follow the guidelines on the page>

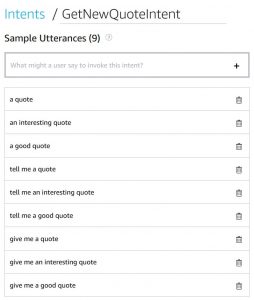

- Intents: GetNewQuoteIntent

- Sample Utterances: enter a few variations of how the user may ask for this intent. This allows your skill to understand how users may naturally ask for your service.

This is basically all you need to define at this point. Make sure to save and build your skill. Amazon seems to be making lots of changes to the new Alexa skill console, so you will most likely run into some growing pains and inconsistencies while using it.

The only other information you will need to enter is the Endpoint, which is the ARN of your Lambda function. You can come back and enter it once you have created the Lambda function.

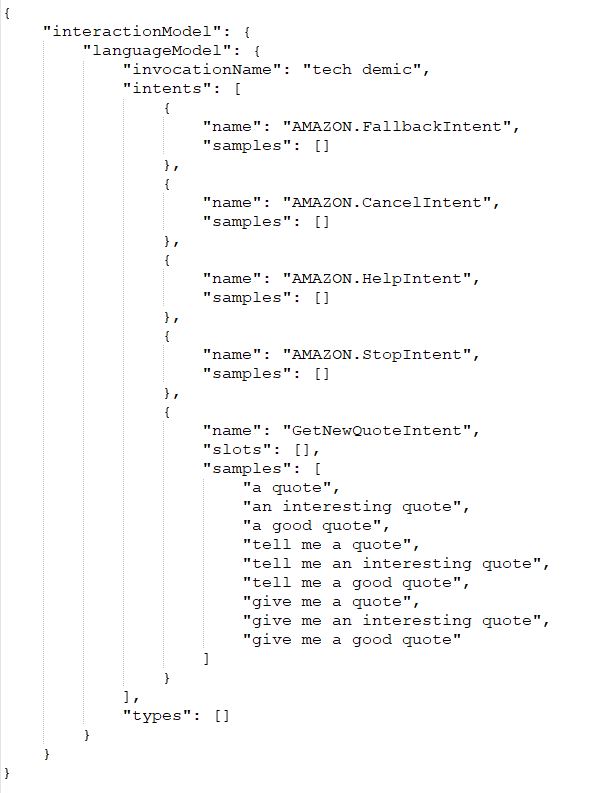

If you click on the JSON Editor, you will see that an interaction model JSON object has been created with all the information you entered via the console. In the future, you may find it easier to update the JSON object directly.

Next, develop a Lambda function to do the back-end processing:
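To give a rough idea of what that JSON object looks like, here is a minimal sketch written as a Node.js object. The invocation name and the intent name come from the steps above; the three sample utterances and the built-in intents are my assumptions for illustration, so the object in your own console will differ.

```javascript
'use strict';

// Sketch of an interaction model for the skill described above.
// The sample utterances are illustrative assumptions, not a required list.
const interactionModel = {
  interactionModel: {
    languageModel: {
      invocationName: 'tech demic',
      intents: [
        {
          name: 'GetNewQuoteIntent',
          slots: [],
          samples: [
            'a quote',
            'give me a quote',
            'tell me a quote'
          ]
        },
        // Built-in intents that a custom skill typically also carries.
        { name: 'AMAZON.HelpIntent', samples: [] },
        { name: 'AMAZON.StopIntent', samples: [] },
        { name: 'AMAZON.CancelIntent', samples: [] }
      ],
      types: []
    }
  }
};

// Print the JSON form, which is what the JSON Editor displays.
console.log(JSON.stringify(interactionModel, null, 2));
```

Keeping the model in a file like this makes it easy to put under version control and paste back into the JSON Editor later.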

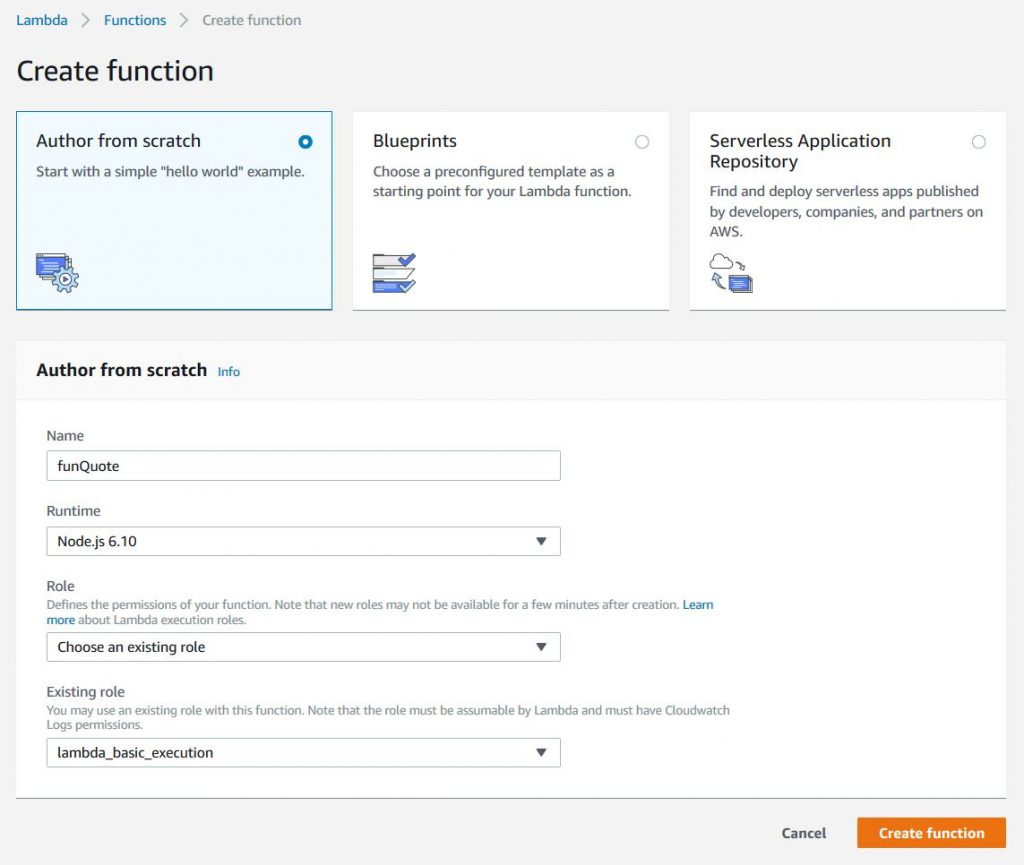

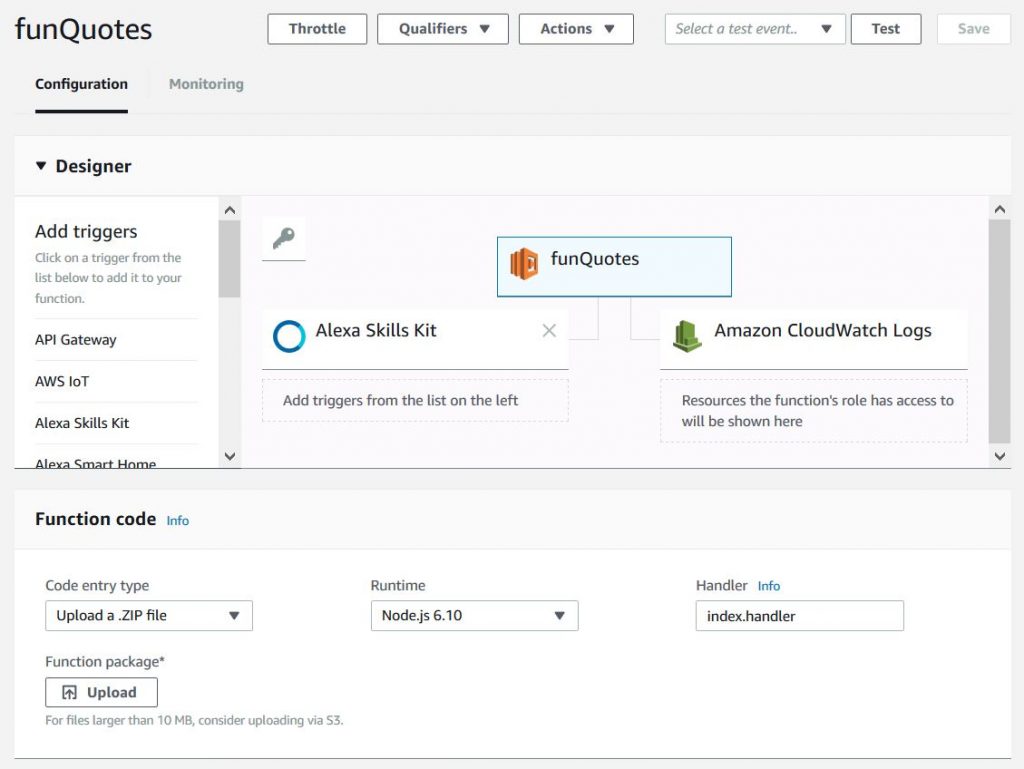

Log on to Amazon Web Services (AWS) to create a new Lambda function. We will create this skill from scratch and call our new Lambda function “funQuotes”. The runtime should be Node.js 6.10, and you must define an execution role for this function. If you already have a “lambda_basic_execution” role, select it; if you don’t, create a new role named “lambda_basic_execution”.

In case you are interested, Amazon provides a few common blueprints that you can use to jump-start your development.

Next, add a trigger to your function and select Alexa Skills Kit as the trigger. You have the option to lock it down further so that only a specific skill can trigger this function, by entering the Skill ID, which starts with amzn1.ask.skill…

Now we are ready to work on the source code. I find the easiest way is to upload a zip file; this way you can edit your source code on your computer using your favorite IDE.

We will have two source files, index.js and AlexaSkill.js. The source files are based on the fact blueprint sample files, modified slightly for our purpose. You can download a copy of the source code from my GitHub repository. Once you have uploaded the source files, please remember to save your work.
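If you prefer to double-check the caller inside the function as well, the incoming request carries the skill's application ID. The following is only a sketch of such a check: the helper name `isFromExpectedSkill` and the placeholder ID are hypothetical, and you would substitute the real Skill ID from your developer console.

```javascript
'use strict';

// Hypothetical placeholder: replace with the Skill ID shown in the developer console.
const EXPECTED_SKILL_ID = 'amzn1.ask.skill.YOUR-SKILL-ID';

// Returns true only when the event says it was sent by the expected skill.
function isFromExpectedSkill(event, expectedSkillId) {
  const application = event && event.session && event.session.application;
  return Boolean(application) && application.applicationId === expectedSkillId;
}

// Example: an event from some other skill is rejected.
const otherEvent = { session: { application: { applicationId: 'some-other-id' } } };
console.log(isFromExpectedSkill(otherEvent, EXPECTED_SKILL_ID)); // false
```

The trigger setting already does this filtering for you, so a check like this is an optional second line of defense.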

The source code is pretty easy to understand. Take a look at index.js: the function handleNewQuoteRequest does the processing for our intent “GetNewQuoteIntent”, which is to pick a random quote from a list of quotes and compose a speech output response. This is where you can further customize your own skill.

Now that our Lambda function is created, you will find its ARN, which starts with arn:aws:lambda:… Copy it, go back to the developer console, and paste it as the Endpoint for your skill. Just enter it as the Default Region. You will see options to enter ARNs for different global regions to get the best performance in each region; we don’t need to define them at this point.
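To illustrate the idea without the AlexaSkill.js helper, here is a minimal, self-contained sketch of such a handler. It is not the code from my repository: the quotes are placeholders, and `pickRandomQuote`, `buildSpeechResponse`, the spoken prefix, and the fallback prompt are names and wording I made up for this example.

```javascript
'use strict';

// Placeholder quotes; substitute your own list.
const QUOTES = [
  'Placeholder quote one.',
  'Placeholder quote two.',
  'Placeholder quote three.'
];

// Pick one entry at random. `random` can be injected to make testing predictable.
function pickRandomQuote(quotes, random) {
  const rand = random || Math.random;
  return quotes[Math.floor(rand() * quotes.length)];
}

// Wrap plain text in the response envelope that Alexa expects.
function buildSpeechResponse(text) {
  return {
    version: '1.0',
    response: {
      outputSpeech: { type: 'PlainText', text: text },
      shouldEndSession: true
    }
  };
}

// Stand-in for the processing behind GetNewQuoteIntent.
function handleNewQuoteRequest() {
  return buildSpeechResponse('Here is your quote: ' + pickRandomQuote(QUOTES));
}

// Lambda entry point: answer the quote intent, otherwise give a short prompt.
function handler(event, context, callback) {
  const request = (event && event.request) || {};
  const isQuoteIntent = request.type === 'IntentRequest' &&
    Boolean(request.intent) && request.intent.name === 'GetNewQuoteIntent';
  if (isQuoteIntent) {
    callback(null, handleNewQuoteRequest());
  } else {
    callback(null, buildSpeechResponse('Ask me for a quote.'));
  }
}

exports.handler = handler;
```

Customizing the skill then mostly comes down to changing the quote list and the text that is spoken.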

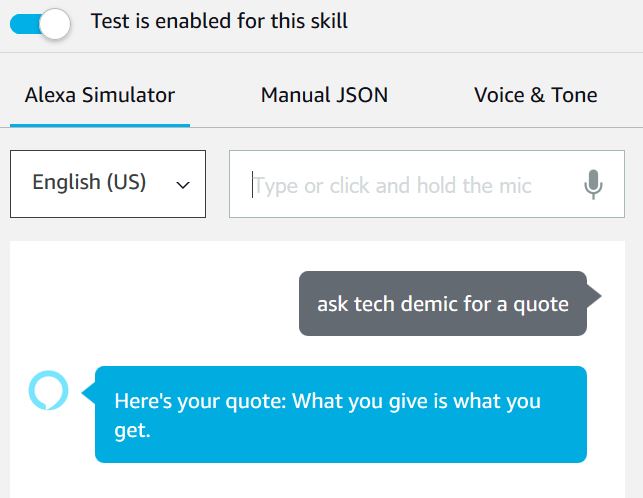

Remember to save and build your skill, and then we are ready to test. The easiest way is to test it first with the Alexa Simulator. You can say or type your request, “ask tech demic for a quote”, and it will respond with the random quote returned from our funQuotes Lambda function.

The test simulator also displays the JSON input and JSON output objects. You can see that the voice command is turned into an “IntentRequest” for “GetNewQuoteIntent”:

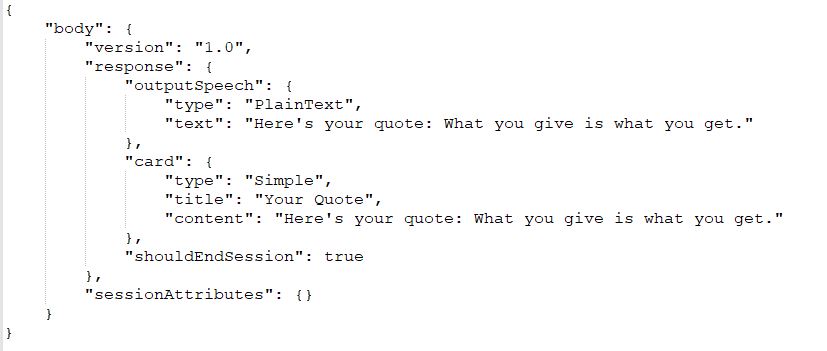

And the response JSON object with the random quote:
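For orientation, here is an abbreviated sketch of the shape of both objects, written as Node.js objects. All identifiers and the quote text are placeholders I filled in for illustration; the real objects in the simulator contain more fields and your own IDs.

```javascript
'use strict';

// Abbreviated request, as sent to the Lambda function (placeholder IDs).
const sampleRequest = {
  version: '1.0',
  session: {
    new: true,
    sessionId: 'SESSION-ID-PLACEHOLDER',
    application: { applicationId: 'SKILL-ID-PLACEHOLDER' }
  },
  request: {
    type: 'IntentRequest',
    requestId: 'REQUEST-ID-PLACEHOLDER',
    locale: 'en-US',
    intent: { name: 'GetNewQuoteIntent', slots: {} }
  }
};

// Abbreviated response, as returned by the Lambda function (placeholder text).
const sampleResponse = {
  version: '1.0',
  response: {
    outputSpeech: { type: 'PlainText', text: 'PLACEHOLDER QUOTE TEXT' },
    shouldEndSession: true
  }
};

// The two fields that matter most when reading the simulator output.
console.log(sampleRequest.request.type + ' -> ' + sampleRequest.request.intent.name);
console.log(sampleResponse.response.outputSpeech.text);
```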

Congratulations! You have just successfully built and tested your first skill.

Conclusion:

Amazon is clearly working hard to provide a simple and powerful architecture framework so that skill developers can focus on the user interface and the business application features instead of the plumbing needed for the underlying voice architecture. Together with the many cloud development services provided by AWS, this really helps to jump-start a project and reduce development time.

In general, Amazon continues to grow and improve its Alexa development console and the AWS console. But due to the rapid development, there are many glitches and inconsistencies as part of the growing pains. It was especially disappointing to see the lack of integration between the developer portal and the AWS portal. The developer basically has to switch constantly between the two and, in many cases, copy and paste very long, cryptic identifiers. I believe a tighter integration between the two portals (or perhaps combining them into one) would help developers navigate seamlessly through all their development work. By using the developer’s identity and assigned roles, Amazon should be able to come up with an integrated development environment that includes both the Developer Portal and the AWS portal, I hope.
